By Matthew A. Griffin, MLIS, Dan Albertson, Ph.D., and Angela B. Barber, Ph.D.

Introduction

A prototype video digital library was developed as part of a partnership between primary care medical clinics and the University of Alabama, a project funded by the National Network of Libraries of Medicine, Southeastern/Atlantic Region (NNLM SEA). This article summarizes the current functionality of a prototype video digital library aimed at supporting physicians treating potential autism cases and describes the developmental processes and implemented system components.

Caregivers of children who fail an autism screener at the primary care clinics partnering with this project may be asked if trained staff at the clinic can conduct a video-recorded structured play-sample for further analysis at the University of Alabama Autism Spectrum Disorders (ASD) Clinic. These videos (children playing) can be uploaded to the video digital library, which in turn makes them available to the ASD Clinic, where teams of experts can select full play-sample videos to examine within a secure area of the video digital library. Observations, scores, and other clinical notes can be attached or appended to the video (i.e., annotated) using an integrated version of the Childhood Autism Rating Scale-Second Edition Standard Version (CARS2). The video digital library then uses evaluation data and other input from autism experts to segment the full videos. Left unsegmented, the full videos would be inefficient for a child’s primary care physician to use within the context of patient care, or to navigate in search of the meaningful observations.

Researchers in this ongoing project anticipate that physicians will be able to use the processed shorter video clips, generated from the full play-sample videos, and corresponding embedded feedback from autism experts, to make decisions about patient care. The digital library ultimately enables physicians and autism experts alike to search and browse usable individual video clips of patients by score, test type, gender, age, and other attributes, using an interface designed around the envisioned users (i.e. health professionals) and basic Human Computer Interaction (HCI) metrics. Having the processed video clips accessible through the digital library allows physicians to compare and contrast different patients and observations across a larger video collection, which can, ultimately, enhance understanding about autism.

The video digital library is a secure, Web-accessible video retrieval system that uses an Apache Web server and SSL certificates to transmit information over Hypertext Transfer Protocol Secure (HTTPS). PHP, MySQL, JavaScript, jQuery, Python, and FFmpeg are the current development tools used for implementation and ongoing maintenance of the video digital library:

- MySQL is the database system of the video digital library.

- PHP enables database connectivity and added security.

- Python programs execute the video processing functions of the video digital library. The Python functions segment and index the contributed video files by handling the naming and iterative functions.

- FFmpeg manipulates the video files.

Details of the video library’s implementation are presented in this article, along with plans for future development, which will further examine certain characteristics of clinical videos for improving search, browse, and application of video as a resource in the context of patient care. Technical innovations of this project include the design of user interfaces to collect, order, and effectively present different information formats for supporting clinical tasks and decision-making.

Key Innovations of Video Digital Library

In this section, two important pages of the digital library with unique interface features will be detailed. The design and functionality of the annotation and search pages contain key innovations of the video digital library.

Annotation Page

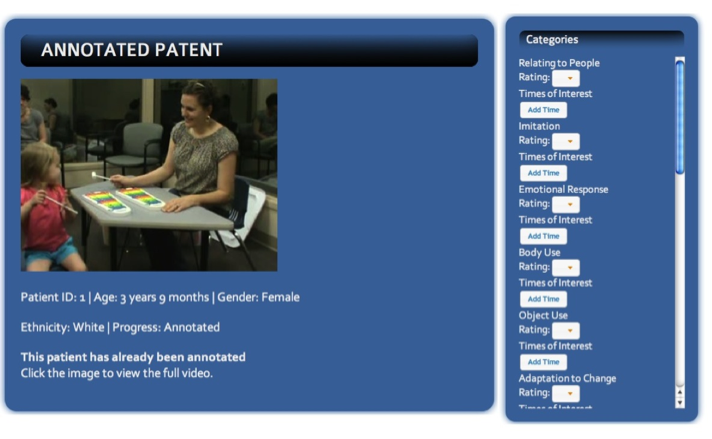

The annotation page enables experts of the UA ASD Clinic to score children and their behaviors while watching a video play session in the digital library. This page incorporates a digital adaptation of the Childhood Autism Rating Scale-Second Edition Standard Version (CARS2) for conducting patient assessments (Schopler et al., 2013). Definitions of scores and categories were inserted by the developers into the annotation page so that the autism experts could quickly reference the meanings of each score across all categories. Each category (e.g. imitation, object use, relating to people, etc.) of the CARS2 has a function that allows the autism experts to indicate up to three time points, along with an accompanying notes field for the times indicated. The autism experts, using the video digital library, manually input time points in mm:ss format and input text in the notes field. The form does not allow for saving drafts; however, since the autism expert must watch the full video to conduct assessments and complete the annotation form, this was not deemed a necessary function. Once the form is submitted, a record for the video is transmitted to the database, and the full video is marked for processing by the programs used to extract the video clips. Full videos are then processed, and individual clips of notable tests are extracted. As a result, the corresponding search results for individual clips are available on the site within 15 minutes of the annotation form being completed and submitted.

Figure 1. Screen capture of the annotation page.
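The time points entered on the annotation form have to become clip start offsets before any video can be cut. The following is a minimal sketch of that conversion, assuming Python; the function names `mmss_to_seconds` and `clip_starts` are hypothetical and are not taken from the project's code.

```python
def mmss_to_seconds(stamp):
    """Convert an 'mm:ss' time point into a whole number of seconds."""
    minutes, seconds = stamp.strip().split(":")
    minutes, seconds = int(minutes), int(seconds)
    if minutes < 0 or not 0 <= seconds < 60:
        raise ValueError("not a valid mm:ss time point: %r" % stamp)
    return minutes * 60 + seconds


def clip_starts(time_points, limit=3):
    """Return start offsets (in seconds) for the filled-in time points of one category."""
    filled = [point for point in time_points if point.strip()]
    if len(filled) > limit:
        raise ValueError("at most %d time points per category" % limit)
    return [mmss_to_seconds(point) for point in filled]


# One category with two of its three time-point fields filled in.
print(clip_starts(["01:30", "", "12:05"]))  # prints [90, 725]
```

Validating the format at this step means a mistyped time point is rejected before a clip is requested from FFmpeg.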

Search Page

The search functions of the video digital library comprise the other innovative page. First, a user can narrow search results dynamically by using the PicNet table filter (Tapia G … [updated 2013]), described further below. The filter creates a dropdown menu that the user can use to limit the search results by hiding the content of filtered table elements. In addition to the dynamic content, such as age or patient ID being filtered, the static definitions of the scores displayed can be refined by keyword search. All limiters of the PicNet table filter can be used in combination with each other to limit results shown. In the event of a system error, the Clear Selection button placed at the top of the results page will refresh and initiate a new session.
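The way the limiters combine can be illustrated with a short sketch. PicNet table filter itself runs in the browser with jQuery; the Python below only mirrors the logic described above (every dropdown selection and the keyword must match for a row to stay visible). The column names and sample rows are hypothetical.

```python
def filter_rows(rows, selections=None, keyword=""):
    """Return the rows that satisfy every dropdown selection and the keyword."""
    selections = selections or {}
    needle = keyword.strip().lower()
    visible = []
    for row in rows:
        # Every chosen dropdown value must match (limiters combine with AND).
        dropdowns_match = all(
            str(row.get(column)) == str(value)
            for column, value in selections.items()
        )
        # The keyword is matched against the static score definition text.
        keyword_matches = not needle or needle in str(row.get("definition", "")).lower()
        if dropdowns_match and keyword_matches:
            visible.append(row)
    return visible


# Hypothetical search results; the real table columns are not given in the article.
results = [
    {"patient_id": 1, "age": 3, "score": 2, "definition": "Mildly abnormal imitation"},
    {"patient_id": 2, "age": 3, "score": 3, "definition": "Moderately abnormal object use"},
    {"patient_id": 3, "age": 4, "score": 2, "definition": "Mildly abnormal imitation"},
]

shown = filter_rows(results, selections={"age": 3}, keyword="imitation")
print([row["patient_id"] for row in shown])  # prints [1]
```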

As important as these two pages are for users of the video digital library, the overall functionality is dependent on its systematic ability to segment video files, i.e. extract video clips.

Figure 2. Screen capture of the search page with PicNet filter.

Development of Video Digital Library

The video digital library is a new application that relies on a number of open source tools. Open source tools used for implementation, other than those briefly mentioned above, are described in this section.

Open Source Tools Used

With the assortment of processes required to build this interactive video digital library, the primary developer looked for open source tools that would provide the means to develop and implement the needed functionality. The Smarty template engine design formed the building blocks for the individual webpages (Basic Syntax … [updated 2013]). Smarty “tags” allow variables to be securely shared across multiple webpages, which helped avoid having to store variables in the address bar or in cookies. Smarty tags also provide the compartmentalization of the development process, and the Smarty template engine permits each page, specifically the annotation and search pages, to have the required flexibility of unique programming and functionality.

Adminer (formerly phpMinAdmin), an open source program, is used as a database management tool. Adminer minimized installation steps and requirements (given that it is a single PHP file) and provided a clean interface for the database manager. PicNet table filter, as presented above, provided an open source solution for the search page, making it possible to filter results once the main search query is submitted. PicNet was developed with jQuery, and thus can dynamically change the results displayed through keyword or dropdown menu. Having the ability to search and browse by both known and exploratory criteria is crucial for a digital library type of retrieval tool.

Security

Security of the video digital library was a significant consideration throughout development and implementation, and several security measures were implemented. These barriers were designed to make it unlikely that an unauthorized user or program could access information, such as the videos, clinic notes, and scores, from the video digital library. One primary security feature was the use of PHP templates to hide webpage extensions, in an attempt to camouflage the language in which the webpages were written. Secure sessions were also implemented, and the primary developer tested them with several hacking scenarios. These tests suggested several security enhancements. One specific change, implemented by the primary developer, was the addition of a conditional statement that compares the user’s current IP address with the IP address saved at login for the current session. If the IP address changes during a session, the user is blocked and presented with a login screen, where authorized users can reenter their credentials.
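The IP address check can be sketched in a few lines. The project implements it in PHP; the version below is a Python illustration of the same conditional, and the session key `login_ip` and both function names are assumptions made for this example.

```python
def session_is_valid(session, remote_addr):
    """True only if the request comes from the IP address saved at login."""
    saved_ip = session.get("login_ip")
    return saved_ip is not None and saved_ip == remote_addr


def handle_request(session, remote_addr):
    """Decide whether to serve the page or send the user back to the login screen."""
    if session_is_valid(session, remote_addr):
        return "continue"
    session.clear()  # drop the session so credentials must be reentered
    return "login"


session = {"user": "expert01", "login_ip": "192.0.2.10"}
print(handle_request(session, "192.0.2.10"))    # prints continue
print(handle_request(session, "198.51.100.7"))  # prints login
```

A check of this kind can also log out legitimate users whose address changes mid-session, for example on mobile networks, which is a trade-off to weigh against the added protection.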

Because video is the most important aspect of the video digital library, we used caution in designing the mechanism for delivering video. We decided to use Flash early in the development process so that older web browsers would be able to play the video. Without a video streaming server, however, Flash would download a copy of the video to the user’s computer, creating a serious security issue for the video digital library. We researched several methods of providing video streaming, and the H264 module was found to fit our needs. After installing the H264 module, several changes to the code were necessary. This was an easy task because the video digital library references a single function in order to play video; making the necessary changes there allowed the H264 module to function for the entire video digital library. Full directions for the H264 module are available from http://h264.code-shop.com/trac.

To prevent SQL injection during user searches, the system uses the PHP Data Objects (PDO) extension (Achour M … [updated 2013]). PDO provides a common interface for database access in PHP, and each database system is reached through a database-specific PDO driver. For this project we used PDO_MYSQL, but several other drivers are available at: www.php.net/manual/en/pdo.drivers.php

With PDO prepared statements, user-supplied values are bound to placeholders rather than concatenated into the SQL text, so characters such as ‘)’, ‘;’, ‘}’, and ‘]’ cannot change the structure of a query. The example code below shows how we used PDO to insert records into the database.

$database = null;

try {

    $database = new PDO('mysql:host=127.0.0.1;dbname=database_name', 'user', 'password'); //Solution to connection error by adding these following lines.

    $database -> setAttribute( PDO::ATTR_DEFAULT_FETCH_MODE, PDO::FETCH_ASSOC );

    $database -> setAttribute( PDO::ATTR_PERSISTENT, true );

} catch (PDOException $error) {

    //fall back to the hostname if connecting by IP address fails

    $database = new PDO('mysql:host=localhost;dbname=database_name', 'user', 'password');

    $database -> setAttribute( PDO::ATTR_DEFAULT_FETCH_MODE, PDO::FETCH_ASSOC );

    $database -> setAttribute( PDO::ATTR_PERSISTENT, true );

}

Figure 3. Connection to the database using PDO

//prep the query to the database the appropriate info about clips

$query = $database->prepare(<<<HD

INSERT INTO clips (sessionsID, patientID, ratingID, path, key_frame)

VALUES (:sessionsID, :patientID, :ratingID, :path, :key_frame);

HD

);

Figure 4. Preparing database query using PDO

//load the clean variables and write queries to the database

$successful &= $query->execute(array(

    ':sessionsID' => $_SESSION['sessionsID'],

    ':patientID' => $patientId,

    ':ratingID' => $ratingID,

    ':path' => "$/$filename.mp4",

    ':key_frame' => "$/$filename.jpg"

));

Figure 5. Writing to the database using PDO

As seen above, PDO was used to connect to the database, and each record was inserted by binding PHP variables to named placeholders, in the form ':table_field' => $php_variable. This approach prevents a user from conducting a search for “Child play; TRUNCATE TABLE patient;” and causing everything in the patient table to be erased.
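The effect of bound parameters can be demonstrated without a MySQL server. The sketch below uses Python's standard `sqlite3` module as a stand-in for PDO and MySQL, which is an assumption made only so the example is self-contained; the table layout is hypothetical. The hostile search string is passed as a bound value, so it is treated purely as data.

```python
import sqlite3

# In-memory database standing in for the project's MySQL database.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE patient (id INTEGER PRIMARY KEY, note TEXT)")
conn.execute("INSERT INTO patient (note) VALUES (?)", ("first visit",))

hostile = "Child play; TRUNCATE TABLE patient;"

# The search term is bound to the named placeholder :term instead of being
# concatenated into the SQL text, so it cannot add statements to the query.
rows = conn.execute(
    "SELECT id, note FROM patient WHERE note LIKE :term",
    {"term": "%" + hostile + "%"},
).fetchall()

remaining = conn.execute("SELECT COUNT(*) FROM patient").fetchone()[0]
print(rows, remaining)  # prints [] 1
```

The search simply finds no matching notes, and the single record in the table is still present afterwards.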

Video Processing

The program to automatically segment the video into individual clips required multiple iterations, or versions. One such version did not use Python, but instead called FFmpeg directly from PHP. However, in order to quickly develop a script to control for file naming and check for file creation, we used Python. The main function of the Python script is to call FFmpeg for the clip creation and keyframe extraction, from the clips, along with creating filenames and file paths.

Clip Extraction

FFmpeg enables video clip extraction. The correct syntax for creating a usable video file was first sought by trial and error. The best solution was to use the application HandBrake, which uses FFmpeg to process video. As a function of the software, HandBrake displays the command line parameters used when converting video. The resulting code from HandBrake is “ffmpeg, ‘-y’, ‘-i’, filename, ‘-vcodec’, ‘libx264’, ‘-s’, ‘320x240’…”, which was then adapted for use in the Python script as seen below.

import subprocess

from time import sleep

def cut(movie, start, clip_name): #calls FFmpeg to slice a 15-second clip from the video

    subprocess.Popen(['../FFmpeg', '-y', '-i', movie, '-ss', str(start), '-t', '15', "-vcodec", "libx264", "-s", "320x240", "-crf", '20', "-async", '2', "-ab", "96", "-ar", "44100", clip_name]) #starts FFmpeg without waiting for it to finish

    sleep(120) #solves race condition when clips are long

Figure 6. Primary Python function

As seen in the comments of the code, a race condition was discovered late in the development process, and the quick fix was to add a wait command. It is bad practice to depend upon a wait command to fix a bug. However, because this script runs on the server, the user will never be aware of the wait time. In addition, this fix appears to be one of the few ways to address this kind of race condition, known as “time of check to time of use,” or TOCTTOU. A TOCTTOU race occurs in the fraction of a second between the check for a file and the use of that file. In that interval the file can be deleted, moved, or accessed by another program, leaving it inaccessible (Mathias et al., 2012). Because the problem is not unique to the Python language, its effect can only be mitigated (Pilgrim M … [updated 2013]). The race condition is compounded by the twelve ranking categories, each of which can produce up to three video clips, so at most thirty-six video clips and keyframes are created. Having the program wait was the solution we adopted, as we found no reliable method to check that a file is not in use, or is readable, prior to calling FFmpeg.
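One possible mitigation, offered here as an untested alternative rather than as the approach used in this project, is to block until the FFmpeg process itself exits instead of pausing for a fixed time. The sketch below assumes Python 3.5 or later for `subprocess.run`; the helper name `run_and_wait` is hypothetical, and a short Python child process stands in for FFmpeg so that the example runs anywhere.

```python
import subprocess
import sys
import tempfile
from pathlib import Path


def run_and_wait(command, expected_output):
    """Run a command to completion, then confirm that its output file exists."""
    # subprocess.run() returns only after the child process has exited,
    # so no fixed sleep is needed before the output file is used.
    completed = subprocess.run(command, stdout=subprocess.PIPE, stderr=subprocess.STDOUT)
    return completed.returncode == 0 and Path(expected_output).is_file()


with tempfile.TemporaryDirectory() as folder:
    target = Path(folder) / "1.mp4"
    # Stand-in for the FFmpeg call: a child process that writes its file slowly.
    slow_writer = [
        sys.executable, "-c",
        "import sys, time; time.sleep(0.2); open(sys.argv[1], 'wb').write(b'clip')",
        str(target),
    ]
    ok = run_and_wait(slow_writer, target)

print(ok)  # prints True
```

Waiting on the child process does not prevent another program from touching the file afterwards, so it narrows the race window rather than eliminating TOCTTOU races in general.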

Keyframe Extraction

Keyframes are individual frames, i.e. still images, used to represent the visual contents of a video to a user through a user interface. For this project, once a video clip is created, a keyframe (i.e., visual surrogate) is needed to represent the clip to users in the search results page. The keyframes extracted for the video digital library are stored as JPEG files in order to keep file size down. Keyframes are selected by simply extracting the middle frame of a video clip and designating it as the visual surrogate. For example, if a clip has 100 frames, approximately the 50th frame in the clip would be extracted and designated as the “keyframe.” This keyframe selection approach has proven to be just as effective as other, more complex image processing approaches previously evaluated for detecting the “best” keyframe through content-based comparisons. Extracting the middle frame was made a bit more complex in this context because there was no control over the length of the video uploaded to the website. The best solution was to redirect the standard output of FFmpeg using the parameter ‘stdout = PIPE’, shown in the following code sample. The output contains detailed information about the video file created by FFmpeg. Another function writes the output to a text file, from which the duration of the video is obtained. Extracting the duration is detailed below.

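import subprocess

from subprocess import PIPE, STDOUT

def Logfile(clip_name): #captures FFmpeg's description of a clip

    result = subprocess.Popen(['../FFmpeg', '-i', clip_name], stdout = PIPE, stderr = STDOUT) #FFmpeg reports file details on stderr, so stderr is merged into stdout

    return [result.stdout.readlines()]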

Figure 7. Python function for redirecting the standard output of FFmpeg.

b'FFmpeg version N-43574-g6093960 Copyright (c) 2000-2012 the FFmpeg developers\n'
b' built on Aug 15 2012 05:18:13 with gcc 4.6 (Debian 4.6.3-1)\n'
b" configuration: --prefix=/root/FFmpeg-static/64bit --extra-cflags='-I/root/FFmpeg-static/64bit/include -static' --extra-ldflags='-L/root/FFmpeg-static/64bit/lib -static' --extra-libs='-lxml2 -lexpat -lfreetype' --enable-static --disable-shared --disable-ffserver --disable-doc --enable-bzlib --enable-zlib --enable-postproc --enable-runtime-cpudetect --enable-libx264 --enable-gpl --enable-libtheora --enable-libvorbis --enable-libmp3lame --enable-gray --enable-libass --enable-libfreetype --enable-libopenjpeg --enable-libspeex --enable-libvo-aacenc --enable-libvo-amrwbenc --enable-version3 --enable-libvpx\n"
b' libavutil 51. 69.100 / 51. 69.100\n'
b' libavcodec 54. 52.100 / 54. 52.100\n'
b' libavformat 54. 23.100 / 54. 23.100\n'
b' libavdevice 54. 2.100 / 54. 2.100\n'
b' libavfilter 3. 9.100 / 3. 9.100\n'
b' libswscale 2. 1.101 / 2. 1.101\n'
b' libswresample 0. 15.100 / 0. 15.100\n'
b' libpostproc 52. 0.100 / 52. 0.100\n'
b"Input #0, mov,mp4,m4a,3gp,3g2,mj2, from '/videos/demo01/1/1/3.mp4':\n"
b' Metadata:\n'
b' major_brand : isom\n'
b' minor_version : 512\n'
b' compatible_brands: isomiso2avc1mp41\n'
b' encoder : Lavf54.23.100\n'
b' Duration: 00:00:15.03, start: 0.000000, bitrate: 522 kb/s\n'
b' Stream #0:0(und): Video: h264 (High) (avc1 / 0x31637661), yuv420p, 320x240 [SAR 1:1 DAR 4:3], 385 kb/s, 29.97 fps, 29.97 tbr, 30k tbn, 59.94 tbc\n'
b' Metadata:\n'
b' handler_name : VideoHandler\n'
b' Stream #0:1(und): Audio: aac (mp4a / 0x6134706D), 44100 Hz, stereo, s16, 128 kb/s\n'
b' Metadata:\n'
b' handler_name : SoundHandler\n'
b'At least one output file must be specified\n'

Figure 8. Raw output of FFmpeg as written in the text file.

The script iterates over each line of the FFmpeg output, as seen above, checking for the string “Duration”; when a line contains it, the script extracts the duration of the video by slicing the string. The appropriate time at which to extract the keyframe is computed by converting the duration of a clip into seconds and dividing it by two. With the middle of the clip calculated, Python names the clip iteratively using the string formatting expression (‘%s’ % (count)). The clip number, or file name, matches the resulting keyframe name. For example, Python names the full video full_video, and each extracted video clip is numbered sequentially (e.g. 1.mp4, 2.mp4). The filename for the keyframe matches that of the video clip, with a .jpg file extension. The full video, extracted clips, and representative keyframes for each submitted play sample are all saved in a separate folder. The file path for each play sample (i.e. session for a child) is built using the patient ID and the session ID. For example, the path for the second session, or “play sample,” of Patient 1 would be ‘/data/1/2’. Using Python in conjunction with FFmpeg was challenging, but the end result is a digital library application able to process video and extract shorter clips according to input from the autism experts.
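The duration parsing, midpoint calculation, and file naming described above can be sketched as follows. This is a minimal illustration and not the project's script: the function names `duration_seconds` and `clip_files` are hypothetical, and the `/data` root is taken from the example path in the text.

```python
def duration_seconds(output_lines):
    """Find FFmpeg's 'Duration: HH:MM:SS.ss' line and return the value in seconds."""
    for raw in output_lines:
        line = raw.decode("utf-8", "replace") if isinstance(raw, bytes) else raw
        if "Duration:" in line:
            # Slice out the text between 'Duration:' and the first comma.
            stamp = line.split("Duration:")[1].split(",")[0].strip()
            hours, minutes, seconds = stamp.split(":")
            return int(hours) * 3600 + int(minutes) * 60 + float(seconds)
    raise ValueError("no Duration line found in FFmpeg output")


def clip_files(patient_id, session_id, count, root="/data"):
    """Build matching clip and keyframe paths for one numbered clip."""
    folder = "%s/%s/%s" % (root, patient_id, session_id)
    return "%s/%s.mp4" % (folder, count), "%s/%s.jpg" % (folder, count)


# Two lines in the same form as the raw FFmpeg output shown in Figure 8.
sample = [
    b"Input #0, mov,mp4,m4a,3gp,3g2,mj2, from '/videos/demo01/1/1/3.mp4':\n",
    b" Duration: 00:00:15.03, start: 0.000000, bitrate: 522 kb/s\n",
]

midpoint = duration_seconds(sample) / 2  # time offset, in seconds, for the keyframe
print(midpoint)
print(clip_files(1, 2, 3))  # prints ('/data/1/2/3.mp4', '/data/1/2/3.jpg')
```

For the 15.03-second sample clip the midpoint falls at roughly 7.5 seconds, which is the offset that would be handed to FFmpeg when grabbing the still image.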

Future Development

At the conclusion of the primary developmental stages, system developers compiled a list of tools that would further enhance implementation and functionality of the video digital library. The annotation and search pages, discussed above, were notably complex to design and implement. Future development of the system may potentially benefit by incorporating AJAX (Asynchronous JavaScript and XML) for making the annotation form more dynamic and user friendly, considering AJAX allows webpage content to change and update without reloading the full webpage (AJAX Tutorial … [updated 2013]).

One obstacle remains for any future development of this system. When patient age is used to search for clips, several clips are returned, but one of the returned clips does not match the search criteria. The database entry for that clip is correct, so the error most likely lies in the PHP code. Further development of the search page is at the top of the developers’ priorities because of the importance of being able to search the digital library using different user criteria. Search is further complemented by browse functions, e.g. for browsing search results, which is significant for visual collections, as users expect visual feedback and surrogates with which to peruse a collection and on which to base relevance judgments.

Conclusion

Open source tools and platforms were combined to make a stable, secure, and innovative video digital library. Lessons learned from the development of this system motivate future research and experimentation.

This project demonstrates potential for enhancing clinical services in underserved areas. Furthermore, the prototype video digital library can be used to streamline patient assessments by autism experts and provide enhanced information back to the patient’s primary physician for making clinical decisions. Future research with this project can also employ the prototype presented here to examine how video digital libraries enhance understanding of autism among physicians and physician/caregiver communications. In addition, findings from future research may lead to health literacy centered designs of video digital libraries.

References

Achour M, Betz F. PHP Manual [Internet]. [Updated 2013 Nov 15]. www.php.net; [cited 2013 Nov 17]. Available from: http://www.php.net/manual/en/

AJAX Tutorial [Internet]. [Updated 2013 Nov 17]. www.w3schools.com; [cited 2013 Nov 17]. Available from: http://www.w3schools.com/ajax/

Bellard F. FFmpeg Documentation [Internet]. [Updated 2013 Nov 17]. www.ffmpeg.org.; [cited 2013 Nov 17]. Available from: http://www.ffmpeg.org/documentation.html

Basic Syntax [Internet]. [Updated 2013 Nov 13]. www.smarty.net; [cited 2013 Nov 13]. Available from: http://www.smarty.net/docs/en/language.basic.syntax.tpl

Mathias P., Gross T. Protecting Applications Against TOCTTOU Races by User-Space Caching of File Metadata. 2012. ACM SIGPLAN Notices; 47(7):215-226. Available from: http://libdata.lib.ua.edu/login?url=http://search.ebscohost.com/login.aspx?direct=true&db=edswsc&AN=000308657200020&site=eds-live&scope=site

Pilgrim M. 2009. Dive Into Python 3 [Internet]. 2nd ed. Apress; [cited 2013 Nov 17]. Available from: http://www.diveinto.org/python3/

Schopler E, Van Bourgondien M. Childhood Autism Rating Scale, Second Edition (CARS2) [Internet]. [Updated 2013 Nov 15]. Pearson; [cited 2013 Nov 15]. Available from: http://www.pearsonclinical.com/psychology/products/100000164/childhood-autism-rating-scale-second-edition-cars2.html

Tapia G. PicNet Table Filter [Internet]. [Updated 2013 Nov 17]. www.picnet.com.au; [cited 2013 Nov 17]. Available from: http://www.picnet.com.au/picnet-table-filter.html

About the Authors

Matthew A. Griffin is a first-year doctoral student in the College of Communications and Information Science, University of Alabama, where he recently received an MLIS. Matthew is interested in researching digital libraries as part of his doctoral program.

Dan Albertson is an Associate Professor in the School of Library and Information Studies, University of Alabama. His work can be found in places such as the Journal of the American Society for Information Science and Technology, Journal of Documentation, Journal of Information Science, Journal of Education for Library and Information Science, and others. Dan’s primary research interest is interactive information retrieval; he holds a Ph.D. in Information Science from Indiana University, Bloomington.

Angela Barber is an Assistant Professor in the Department of Communicative Disorders, University of Alabama. Her research focuses on early identification and intervention with young children who have autism. She holds a Ph.D. in Communication Disorders from Florida State University.
