Introduction

One application of Artificial intelligence (AI) in libraries is its potential to assist in metadata creation for images by generating descriptions, titles, or keywords for digital collections. Many AI options are available, ranging from cloud-based corporate software solutions, including Microsoft Azure Custom Vision and Computer Vision, and Google Cloud Vision, to open-source locally hosted software packages like OpenCV and Sheeko. This case study examines the feasibility of deploying the open-source, locally hosted AI software, Sheeko (https://sheeko.org/), and the accuracy of the descriptions generated for images using two of the pre-trained models. The study aims to ascertain if Sheeko’s AI would be a viable solution for producing metadata in the form of descriptions or titles for digital collections within the Libraries and Cultural Resources (LCR) at the University of Calgary.

Setting

As with many institutions, the University of Calgary’s Libraries and Cultural Resources has a large number of digital collections; many of which have insufficient descriptive metadata in the form of accurate titles and descriptions. Much of LCR’s digital collections are archival in nature, and have donor agreements that would require us to seek permission to process them through third-party AI services. During COVID-19, LCR was in the process of migrating our digital collections from CONTENTdm to a new Digital Assets Management (DAM) system by Orange Logic (https://www.orangelogic.com/products/digital-asset-management-system). While Orange Logic’s DAM offers third party AI services from Google and Microsoft for keywords generation, facial recognition, and flagging offensive content, but it does not offer image captioning as part of its AI services. As we looked to AI to help improve and potentially automate some aspects of metadata creation for our digital collections, project Sheeko offered a potential solution in the form of a local open-source software for producing captions that we hoped could be used to create descriptions or titles for images; particularly within some of our larger collections.

Potential Corporate Service Solutions for AI Images in Processing

Before delving into the specifics of this case study of testing Sheeko’s locally hosted AI, it is useful to do a brief environmental scan of the solutions that were now available to the University of Calgary, and weigh some of the strengths and weaknesses in relation to metadata creation. Microsoft’s Azure Custom Vision (https://learn.microsoft.com/en-us/azure/cognitive-services/custom-vision-service/overview) “is an image recognition service that lets you build, deploy, and improve your own image identifier models” (Farley et al. 2022). There is one major difference between Microsoft Computer Vision and Custom Vision services. Custom Vision gives users the ability to specify labels to be used on objects within images and train models to detect those objects, whereas Computer Vision offers users a fixed “ontology of more than 10,000 concepts and objects” that can be applied to images (Computer Vision . . . c2023). Computer Vision also offers a suite of other services including Optical Character Recognition (OCR), and Facial Recognition services that are also useful for the discovery of images in digital collections but not addressed in this article. While Azure’s Computer Vision (https://learn.microsoft.com/en-us/azure/cognitive-services/computer-vision/overview) does offer a service for captioning as part of the Cognitive Services suite, this product is not included in Orange Logic third party services and would require further customization. Google Vision (https://cloud.google.com/vision) allows users to “assign labels to images and quickly classify them into millions of predefined categories. Detect objects, read printed and handwritten text, and build valuable metadata into your image catalog” (Vision AI … n.d.). LCR does plan on testing facial recognition technology, and the auto tagging of images with keywords in the future, but neither were useful on their own in deriving standard descriptions or titles we presently wanted. Both Microsoft and Google services require the images to be sent via an API to their services, then processed and the results returned, which takes time and processing offsite. Were this processing to be done on site with a local machine, image data could be processed at a higher volume with improved speed. Additionally, both Google’s and Microsoft’s services have a cost associated, though the costs for these services are not substantial enough to be a deterrent from using these services [1][2]. The biggest strength of these third party services offered via Orange Logic is their ease of use, their integration with the digital collection platform, and their ability to recognize discrete objects or people and then auto-tag images. However, neither of these services offered the more robust solution of generating multiple words used in captions that are needed for the description of images, or their titles. On the other hand, Sheeko did potentially offer a captioning solution.

Open-source local solution for image AI Sheeko

Sheeko is a locally-hosted AI software environment developed by a team from the University of Utah’s J. Willard Marriott Library, consisting of Harish Maringanti (Associate Dean for IT & Digital Library Services), Dhanushka Samarakoon (Assistant Head, Software Development), and Bohan Zhum (Software Developer). Sheeko applies machine learning models to image collections to detect objects, and generate captions. Sheeko is built on an open-source software environment whose components are listed or available for download via GitHub. Sheeko provides potential users three options of how to use the environment, as described in the “Training a Model” and “Sheeko Pretrained Models Resource” sections of the README in the GitHub repository (Samarakoon and Zhu 2021). Sheeko users can train their own model (using Inception v3 model), fine tune an existing model (using the im2txt model), or use one of seven pre-trained models available for download on their website (Maringanti et al. n.d). However, before embarking on this process, users must install the package either in VirtualBox or by downloading the code package and additional prerequisite software.

The intent of the testing of Sheeko at the University of Calgary was to ascertain if training a model, or deploying a pre-trained model of Sheeko was a feasible answer to the need for description and title metadata required for image collections that were currently undescribed. The test at the University of Calgary was carried out in 2020 during COVID-19, while most staff, myself included, were working from home. An initial attempt to install the VirtualBox was unsuccessful, and I asked our Technology Services staff for assistance.

Because the virtual box environment is supported using vagrant and requires that users enable BIOS mode, and this work was to be performed on a University managed laptop currently in my home, with remote assistance from Technology Services, the use of the Virtual Box was not an option. Therefore, a local install of the code package was the chosen method. To deploy Sheeko locally, there are specific hardware configurations required, and a dozen code package dependencies as listed in the Catalyst white paper and in the getting started section of their Github (Maringant et al. 2019; Samarakoon and Zhu 2021). Of the many system perquisites required there are specific GPU and driver installations, and a knowledge of Python scripting at more than an introductory level. These tasks took a number of weeks for Technology Services staff to work through. Once the software was installed we decided to test two pre-trained models on a set of 114 images. All of the pre-trained models available for download from project Sheeko use Tensorflow’s “Show and Tell” model as a base model which is refined to different specifications in each of the seven models available. Tensorflow is an “end-to-end open source platform for machine learning” (Tensorflow n.d.). The “Show and Tell model is a deep neural network that learns to describe the content of images” (Kim et al. 2020). This case study used two models: the MS COCO model (ptm-im2txt-incv3-mscoco-3m) that was trained on images from Microsoft Common Objects in Context; and Marriott model (ptm-im2txt-incv3-mlib-cleaned-3m) that was trained on historical photographs from the Marriott Library’s digital collection. The main goal for the testing was to see if either of these models would be suitable for any of a variety of collections ranging from historical to more contemporary photographs. In the vein of more scientific experiments, we wanted to keep as many variables as possible constant across the two models. Both the selected pre-trained models were previously trained with the Show and Tell model, with a starting point of Inception v3 and 3 million steps. The two major differences between the models were the images used for the training, and that the Marriott model went through natural language processing and cleaning to remove proper nouns.

Image Sample Selection

To ascertain the strengths and weaknesses of the two Sheeko models selected, and to see which model performed best for images with certain qualities or objects, a cross section of 114 images from large digital collections that were lacking descriptive titles was selected. This included Images from the Glenbow Library and Archives (https://digitalcollections.ucalgary.ca/Package/2R340826N9XM), featuring largely historical images about Western Canada and Alberta’s provincial history and settler life, dating from the late 1800s onward. Images from our Mountain Studies collection (https://digitalcollections.ucalgary.ca/Package/2R340822NL8R), were of the national parks in the Canadian Rockies and featured mountains, landscapes, and wildlife. Images from the Calgary Stampede collection (https://digitalcollections.ucalgary.ca/Package/2R3BF1GBVDJ), were again Western themed but more contemporary and with a focus on horses, cowboys and fairgrounds, dating from the 1960s onward, though the collection itself contains images from 1908 onward. Images from EMI Music Canada Archive (https://digitalcollections.ucalgary.ca/Package/2R340822NOZP), were of music objects including tapes, records, CDs and other carrier cases. Images from the Nickle Galleries were of a few art pieces, and currently not available to the public. Images from the University of Calgary Photographs collection (https://digitalcollections.ucalgary.ca/Package/2R3BF1SQXF7JW) featured university buildings from 1950s onward. The remaining images were taken from the Alberta Airphotos collection (https://digitalcollections.ucalgary.ca/Package/2R34082235HO), the Arctic Institute of North America Photographic Archives collection (https://digitalcollections.ucalgary.ca/Package/2R3BF1SS8HHXX) and the Winnipeg General Strike collection (https://digitalcollections.ucalgary.ca/Package/2R340822NUM1).

Ranking system for Sheeko captions

When Sheeko runs a model to generate captions for images, each model will output a json file that contains the all file names of the images processed, the three captions generated for each file name, and a confidence rating for each caption. A higher confidence rating did not necessarily correspond to the captions chosen as the best fit. While the json output is useful, it does mean that users must view the files separately from the captions, which is not user friendly or helpful when one is trying to assess the best captions for an image. One of the Library Technology Support Analysts, Karynn Martin-Li, at Libraries and Cultural Resources had the brilliant idea of building a viewer that would display the image and the three captions from each model tested. The custom viewer made the process of selecting the appropriate caption from each model much more user friendly. This was custom built by Martin-Li and not included in Sheeko, but this created an interface and made it much easier to use.

{"file_name": "glenbowid21521.jpg",

"captions": [{"caption_text": "a black and white cow standing in a field.",

"score": 0.0012332111498308891},

{"caption_text": "a black and white photo of a cow standing in the snow.",

"score": 0.0004844283302840979},

{"caption_text": "a black and white cow standing in a field",

"score": 0.00048442775066202847}]},

{"file_name": "glenbowid21745.jpg",

"captions": [{"caption_text": "a black and white photo of a city street.",

"score": 0.0016210542383307202},

{"caption_text": "a black and white photo of a city street",

"score": 0.000650292656660278},

{"caption_text": "a black and white photo of a city bus",

"score": 0.0004501958654109137}]},

{"file_name": "glenbowid21841.jpg",

"captions": [{"caption_text": "a black and white photo of a city street.",

"score": 0.001934344178677972},

{"caption_text": "a black and white photo of a city street",

"score": 0.0014626184147026346},

{"caption_text": "a black and white photo of a building",

"score": 0.0007475796404555153}]},

For all 114 images processed, one of the three captions for each image was selected as the best fit from both the MS COCO model and the Marriott model. The selected captions from each of these models were ranked on a scale of zero to five (zero being the lowest quality caption, and five being the highest quality caption). Ranking the captions was based on three variables: the identification of the subjects or objects present in the image (0-2 points), the background or setting of the image (0-2 points), and the image type (0-1 points). A total rank of three or above is something that would be considered usable as a base for a description. Examining a few captions from each of the models will illustrate how the rank system worked.

Observations of Sheeko’s captions

The photograph titled “’Canso’ at head of Coronation Fiord” in the University of Calgary Arctic Institute of North America collection was given rank of five for MS COCO model’s caption “a black and white photo of a plane in the water” (Fig.1). The MS COCO caption has appropriately described the type of photo as black and white, and the object of an airplane, and the background setting of water. The Marriott model’s caption for this image was assigned a rank of one for its caption “photo shows a helicopter being used like a crane to transport a tower during construction of a lift”. This caption accurately describes the type of image as a photo, but does not correctly identify the object, or background in the image.

Figure 1.

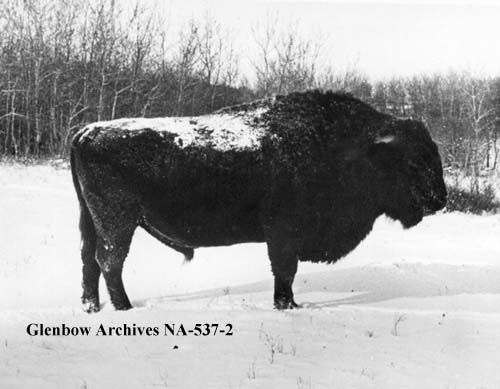

The image titled “Cattalo, male, named ‘King’ in Buffalo National Park near Wainwright, Alberta.” in the Glenbow Library and Archives digital collection received a rank of 4 for the MS COCO model’s caption “a black and white photo of a cow standing in the snow” (Fig.2). This caption correctly identifies the type of photo as black and white, but description of the objects is only partially accurate. The animal in this image is a cattalo, a cross between a cow and a buffalo, granted it was anticipated that no AI model has been trained on cattalos at this point. But nonetheless the animal does appear much like a buffalo, and so the caption is half correct when it categorizes the subject as a cow. The background is also only partially correct as the animal is standing in the snow, but there is no mention of a field, pasture, or trees present in the background. The Marriott caption for this image was “photo show a cow grazing” ranked at a score of 2, as the type of image being described as photo is correct, but lacking the descriptors of black and white. The subject of a cow is half correct, and the animal is not grazing, and no background is mentioned.

Figure 2.

For the postcard titled “Dog Team in Heavy” by photographer Byron Harmon, in the Mountain Studies digital collection, both models produced captions with a rank of 1 (Fig.3). The MS COCO model generated the caption “picture of a vase of flowers on a table”, correctly identifying only the image type as a picture, and being incorrect about the dogs and man in the postcard, as well as the setting of a snow covered forest. The Marriott model produced the caption “artwork a person” and received a rank of 1, as the image is an artwork but the artwork is a photograph so there were no points given for the type of image. The model did correctly identify one of the subjects as a person, but does not mention the dogs, nor does it describe the background at all.

Figure 3.

The overall results were that the MS COCO pre-trained model performed significantly better, with 53% of the images having a score of 3 or above. The Marriott Library pre-trained model had only 15% of the images with a score of 3 or above. A full breakdown of the results can be seen in Table 1.

| Rank | MS COCO count of rank | Marriott count of rank |

|---|---|---|

| 0 | 22 (19 %) | 58 (50 %) |

| 1 | 10 (9 %) | 25 (21%) |

| 2 | 21 (18 %) | 16 (14 %) |

| 3 | 29 (25 %) | 13 (11 %) |

| 4 | 24 (21 %) | 1 (< 1 %) |

| 5 | 8 (7 %) | 1 (< 1 %) |

| Total Images processed | 114 | 114 |

Of the images processed, both models performed poorly on images from EMI Music Canada Archive, the Nickle Galleries, and the University of Calgary Photographs collections, which contain particular types of objects such as cassettes, records, artwork, and portraits. From these results it was determined that Sheeko would not be a good fit for images that require the identification of a particular location or images requiring individuals to be named. The models performed the best on images from Calgary Stampede collection and Glenbow collections where it was describing more general objects such as groups of people, buildings, animals, or landscapes. Were further testing to be done using Sheeko, the MS COCO model would be used as a basis for further training.

In the white paper report written by Sheeko’s creators, “Machine learning meets library archives: Image analysis to generate descriptive metadata” they state MS COCO models and other existing models “were developed with a focus on born-digital photographs of everyday objects and scenarios (Maringanti et al. 2019). These models contained objects that were not present in historical photographs” (Maringanti et al. 2019). The misidentification of certain subjects or objects was supported in this case study. It was clear that both models were trained on certain types of object and subject categories, for example horses and cows, but not bison, buffalo or cattalo. When the models see a cattalo it will be described as a horse or a cow, and not a bison. Similarly, neither model produced useful captions for cassettes which the MS COCO model identified as a Nintendo Wii game controller in one case, and the Marriott model described as an artwork. To train a model to identify a particular object category not currently included in that model, such as a bison or a cassette, 10,000 images of that object need to be processed from various angles. Training the AI models does not require a large time investment in processing, but would require a significant time investment in the form collection analysis and image identification to identify the subject and object categories required for training the models, and gathering the images to train a model.

Conclusion

The goal of this case study was to test two of Sheeko’s pre-trained machine learning models to ascertain if they would produce captions that could be used as descriptions or titles, and thereby reduce the amount of time and labor required by staff to describe images in the University of Calgary’s digital collections. Sheeko’s results show that it does produce captions that could be used as a basis for descriptions, with moderate human intervention. The captions would not be suitable for title creation without significant human intervention. Were Sheeko to be used for description generation, it is unclear if the amount of time required to select the most appropriate caption from the three choices generated and then create additional information, or modify existing description with information from the Sheeko generated captions, would be less time than it would take for a person to create description metadata from scratch. At the end of this case study it was determined that Sheeko would not be pursued as a potential AI solution for creating descriptive metadata at this time. The stand alone results from the MS COCO model, though significantly better than those from the Marriott model, only yielded usable captions for 53% of the images. The potential time commitment and resources required from personnel to train models to identify the type of objects and subjects in LCR’s digital collections without descriptions, or with minimal descriptions, were disproportionate to the quality of the results in this case study. The fact that deploying Sheeko, and any further training of the models in Sheeko would have leaned heavily on the skills of staff in LCR’s IT, and required time to create models and source the images needed for modeling were all deterring factors in pursuing Sheeko. This case study was initially designed as a research project for myself, the Digital Metadata Librarian; and Technology Support were to enable this initiative where and when necessary. The reality was that this project was in many ways led by LCR’s IT, to whom I am most grateful.

This case study took place while our Digital Services Department was in the process of migrating from our old digital collections software CONTENTdm to our new Digital Asset Management system by Orange Logic. Now that we have completed the migration, and are more familiar with Orange Logic, it is time to test the capabilities of the built-in third-party AI services, and explore other captioning solutions. Sheeko presented a potential solution for describing a large amount of content locally, without the use of third-party providers, their services fees, or the processing time associated with them. Sheeko and local open-source environments also eliminate the need to pursue seeking permissions and re-writing donor agreements as the images are not processed outside of the home institution, also mitigating any potential data sovereignty issues. A local software solution similar to Sheeko may still be useful in filling a gap in AI image description present in our new digital asset management system. The cost of Sheeko was not a monetary services fee, but rather the cost of wages and valued staff time of our Technology Services staff to do the manual set-up of the software environment needed to run Sheeko, and build additional customization in the form of a viewer to facilitate ease of use. Ultimately Sheeko’s cost was great enough that it has been eliminated as a potential solution for large-scale image captioning at present.

Notes

[1] The price list for Azure cognitive services, including Computer Vision is available at: https://azure.microsoft.com/en-us/pricing/details/cognitive-services/. The Describe service is listed at $1.50/1,000 transactions when processing 0-1 million transactions. After 1 million transactions it is $0.60/1,000 transactions. If Libraries and Cultural Resources were to process all 2,241,793 image in our collection it would cost $2,245.

[2] The price list for Google Vision services is available at: https://cloud.google.com/vision/pricing/ The price for the majority of services, with the exception of Web Detection and Object Localization is $1.50/1,000 transactions when processing 0-5 million transactions. If Libraries and Cultural Resources were to process all 2,241,793 images in our collection, using any one of Google Vision’s services it would cost $3,362. However, Google Vision does not offer captioning.

About the Author

Ingrid Reiche is the Digital Metadata Librarian with Libraries and Cultural Resources at the University of Calgary. Ingrid works largely with Digital Collections and is hopeful that AI can supplement human metadata creation to help describe the growing digital content in academic libraries.

Bibliography

Maringanti H, Samarakoon D, Zhu B. (2019). Machine learning meets library archives: Image analysis to generate descriptive metadata. LYRASIS Research Publications. https://doi.org/10.48609/pt6w-p810

Maringanti H, Samarakoon D, Zhu B. n.d. Sheeko: A computational helper [Internet]. Atlanta (GA): Lyrasis; [cited 2023 Feb 17]. Available from: https://sheeko.org/

Samarakoon D, Zhu B. [updated 2021 Feb 17]. sheeko-vagrant. San Francisco (CA): GitHub; [cited 2023 Feb 17]. Available from: https://github.com/marriott-library/sheeko-vagrant

Farley P, Buck A, MacGregor H, McSharry C, Downer R, Coulter D, contributors. 2022 Nov 22. What is Custom Vision? [Internet]. Redmond (WA): Microsoft; [cited 2023 Feb 17]. Available from: https://learn.microsoft.com/en-us/azure/cognitive-services/custom-vision-service/overview

Computer Vision [Internet]. c2023. Redmond (WA): Microsoft; [cited 2023 Feb 17]. Available from: https://azure.microsoft.com/en-us/products/cognitive-services/computer-vision

Vision AI [Internet]. n.d. Mountain View (CA): Google; [cited 2023 Feb 17]. Available from: https://cloud.google.com/vision#section-3catalog

Tensorflow [Internet]. n.d. [cited 2023 Feb 17]. Available from: https://www.tensorflow.org/overview

Kim J, Murdopo A, Friedman J, Wu N, contributors. [updated 2020, Apr 12]. Show and Tell: A Neural Image Caption Generator. San Francisco (CA): GitHub; [cited 2023 Feb 17]. Available from: https://github.com/tensorflow/models/blob/archive/research/im2txt/README.md

Subscribe to comments: For this article | For all articles

Leave a Reply