Introduction

In 2001, Yale University scanned the full run of the Washington, D.C. alternative weekly newspaper Washington City Paper (WCP) to microfilm and provided digital surrogates to DC Public Library (DCPL) as a professional courtesy. At that time, DCPL had neither dedicated staff nor infrastructure to manage digital materials in a meaningful way, so the collection remained largely dormant until 2014, when the Special Collections division (now named People’s Archive) both hired its first Digital Curation Librarian and launched its digital collections platform, Dig DC. Even then, aside from occasional reference requests, use of the digital WCP was limited because there was no formal agreement between DCPL and the original publisher.

Two key shifts occurred in swift succession that led to movement on the WCP project. Firstly, DCPL reached an agreement with the publishers of the historic gay newspaper Washington Blade to digitize from microfilm, describe, and make available all issues of the paper from 1969 to the present day. The project is still ongoing, but is considered a success by both parties. Thus, when the opportunity to have formal discussions with representatives of WCP presented itself, DCPL had in place not only a model for how the work might be completed, but also a legal framework that allows the publisher to retain copyright while conferring generous rights grantor privileges on the library. DCPL reached a similar agreement with the publishers of WCP in 2020, and planned for the collection’s debut to coincide with the 40th anniversary of the paper in 2021.

Secondly, the COVID-19 pandemic, while catastrophic, forced DCPL to reevaluate its telework policies and revise them for the better, at least temporarily. Library staff required projects that could be completed remotely, and descriptive metadata work was deemed appropriate considering the situation. It should be noted that staff experienced significant anxiety about both their health and employment status at that time due to the impending recession and worsening coronavirus spread, and that this project, as conceived, was designed partly to remove one element of friction from the workdays of people who were under unusual and understandable levels of stress. That element of friction was, in short, the selection of subject headings and name authorities.

DCPL’s previous work in the authoring of descriptive metadata for digital objects was not necessarily seen to be lacking, merely time-consuming. To illustrate, consider the workflow for the Washington Blade project: the project manager provides a batch of PDF issues and a MODS template to another staff member. Many fields are pre-filled, but that staff member creates extensive entries for the item description field and browses the Library of Congress (LC) Linked Data Service to select a set of relevant subject headings for each issue in the batch. Each issue must be read in detail, and a great deal of time must be spent exploring the LC site. With the 40th anniversary of WCP approaching quickly, the question arose: what could be done to speed up this process? Could “Subject” become one of those pre-populated fields?

Workflow

DCPL’s People’s Archive digital unit had long been interested in harnessing, in some way, the OCR text that is automatically generated when an appropriate item is ingested into Dig DC (an Islandora 7 repository). The period of confusion between the beginning of the pandemic and the now-familiar rhythm of telework created an opportunity to step back from normal responsibilities and, with distance, conceive an approach that could generate Library of Congress Subject Heading (LCSH) and Library of Congress Name Authority File (LCNAF) matches from the OCR text of a newspaper issue. The workflow that was developed follows.

Since DCPL does not usually perform OCR until an object, or set of objects, is ingested into Islandora (typically the final stage of a digital collections project), and our goal was to assist with descriptive metadata creation (one of the initial stages), we opted to run Tesseract on the collection in bulk before any other work was done. We decided to focus only on the cover pages of each issue for two reasons: one, it is much simpler and computationally less demanding to run Tesseract on a single folder containing one TIFF per issue than it is to do the same for a series of nested folders containing complete editions (which are around 80 pages each); and two, the covers are in general more succinct and focus largely on the unique content for that issue, which we hoped would result in more accurate subject headings. Since the digital issues utilized a nested folders-within-a-folder structure, we wrote a Python script to extract files with names that matched a pattern shared by all issue covers and place them into a single directory. This resulted in a folder containing 950 images, and consequently 950 files of OCR text.
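The cover-extraction step described above might look something like the following minimal sketch. The filename pattern (`*_0001.tif`), the function names, and the folder layout are assumptions made for illustration; the article does not state the actual naming convention. The second helper only builds a Tesseract command line (Tesseract appends `.txt` to the output base it is given) and does not run it.

```python
import shutil
from pathlib import Path


def collect_covers(source_root, dest_dir, pattern="*_0001.tif"):
    """Copy every file under source_root whose name matches pattern into dest_dir.

    The default pattern is hypothetical; substitute whatever naming
    convention the cover images actually share. dest_dir should sit
    outside source_root so copies are not picked up on a later run.
    """
    source_root = Path(source_root)
    dest_dir = Path(dest_dir)
    dest_dir.mkdir(parents=True, exist_ok=True)
    copied = []
    # rglob walks the nested issue folders recursively.
    for path in sorted(source_root.rglob(pattern)):
        if path.is_file():
            target = dest_dir / path.name
            shutil.copy2(path, target)
            copied.append(target)
    return copied


def tesseract_command(tiff_path):
    """Build (but do not run) a Tesseract call that writes <name>.txt beside the TIFF."""
    tiff_path = Path(tiff_path)
    return ["tesseract", str(tiff_path), str(tiff_path.with_suffix(""))]
```

Each command returned by `tesseract_command` could then be passed to `subprocess.run` for every file returned by `collect_covers`, assuming Tesseract is installed and on the system path.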

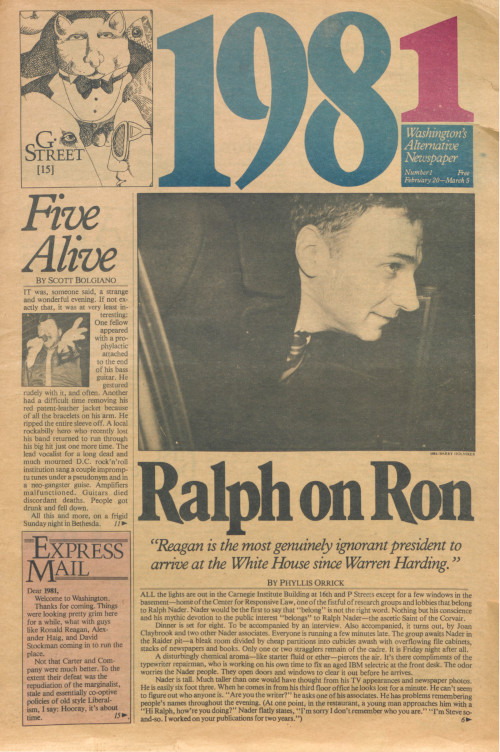

Figure 1. The cover for the first issue of the publication, which was simply titled “1981” before adopting its current title of “Washington City Paper”.

The next step was to extract commonly used words from those files, and we identified Python’s Natural Language Toolkit (NLTK) as the most appropriate tool for the job. We created another Python script to iterate through the text files, produce a cleaned version of each, and apply NLTK to the cleaned versions to output a list of the ten most frequently encountered terms to an additional set of text files. A final Python script then combined the resulting top ten rankings into a single CSV featuring two columns (“filename” and “content”). While the process technically worked from the beginning, results were not initially usable because NLTK’s default list of 127 English stopwords, which was used in the text cleaning process, is not extensive enough. Ultimately, we employed an expanded list of 1,160 stopwords compiled from multiple sources.
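As a rough illustration of the term-ranking and CSV steps, the sketch below uses only the Python standard library: `collections.Counter` stands in for NLTK’s frequency counting, and a tiny inline stopword set stands in for the expanded 1,160-word list. The function names, the stopword set, the minimum word length, and the `|` delimiter in the “content” column are all assumptions, not the project’s actual choices.

```python
import csv
import re
from collections import Counter
from pathlib import Path

# Illustrative only; the project used a much larger compiled stopword list.
STOPWORDS = {"the", "and", "of", "a", "to", "in", "is", "for", "on", "it"}


def top_terms(text, stopwords=STOPWORDS, n=10):
    """Return the n most frequent non-stopword terms in a block of OCR text."""
    # Lowercase and keep alphabetic runs only, discarding OCR punctuation noise.
    tokens = re.findall(r"[a-z]+", text.lower())
    kept = [t for t in tokens if t not in stopwords and len(t) > 2]
    return [term for term, _ in Counter(kept).most_common(n)]


def write_rankings(ocr_dir, csv_path, stopwords=STOPWORDS, n=10):
    """Write one row per OCR text file: its filename and its top terms."""
    with open(csv_path, "w", newline="", encoding="utf-8") as handle:
        writer = csv.writer(handle)
        writer.writerow(["filename", "content"])
        for txt in sorted(Path(ocr_dir).glob("*.txt")):
            text = txt.read_text(encoding="utf-8", errors="ignore")
            writer.writerow([txt.name, "|".join(top_terms(text, stopwords, n))])
```

Passing a larger set as `stopwords` is the only change needed to try a different stopword list, which is where most of the tuning effort in a workflow like this is likely to go.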

Lastly, the CSV was brought into OpenRefine and cleaned up so that each term to be matched against LC occupied a single cell. We then used Christina Harlow’s LC OpenRefine Reconciliation Endpoint to reconcile the text in each column, and imported high-percentage LCSH and LCNAF matches into the MODS templates used by our metadata workers.

Results and analysis

We were not able to fully pre-populate the Subject field. That said, this process identified 1,972 LCSH and LCNAF matches for 950 issues, and 525 of those matches (roughly one quarter) were selected as relevant to the content of the issues being described. We found that terms with a certain level of specificity resulted in more useful matches. Examples that were included in the final collection metadata are “Vodka”, “Nicaragua”, “Rent”, “Preservation”, “Gambling”, “Radio”, “Squirrels”, “Poetry”, “Christmas”, “Assassination”, “Interferon”, and, the author’s personal favorite, “Leprechauns”. By contrast, “American” and “Home” were not included.

One element of this workflow that was not particularly successful, and that could be iterated upon in future implementations, is the focus on identifying single words instead of multi-word terms for reconciliation against LC. To illustrate, this solution in no way accounts for terms like “American Ambassador” (which in one instance was split into “American” and “Ambassador”, each reconciled against LC separately), “Infant formula” (which was treated as “Infant” and “Formula”), or “Enola Gay” (which was treated as “Enola” and “Gay”). While terms were technically selected, the original meanings were altered due to this limited approach.
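One possible direction for such an iteration, offered only as a hypothetical sketch and not as part of the workflow described here, is to count adjacent word pairs so that terms like “Enola Gay” survive as a unit. The function name and the stopword handling below are assumptions.

```python
import re
from collections import Counter


def top_bigrams(text, stopwords=frozenset(), n=10):
    """Return the n most frequent adjacent word pairs, skipping pairs with a stopword."""
    tokens = re.findall(r"[a-z]+", text.lower())
    pairs = [
        f"{first} {second}"
        for first, second in zip(tokens, tokens[1:])
        if first not in stopwords and second not in stopwords
    ]
    return [phrase for phrase, _ in Counter(pairs).most_common(n)]
```

Two-word candidates produced this way could be reconciled alongside the single-word terms, though whether they would yield better LC matches is untested.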

Unfortunately, we have little to no data to determine how this work impacted the quality of life of our metadata creators. What is obvious, however, is that their work cannot be easily replicated, merely supplemented; NLTK, for all of its strengths, cannot match the creativity, cognition, and intuition of library workers and their abilities to read contextually and make judgement calls. The process of computationally identifying subjects and name authorities certainly adds value to the collection, and may save workers small amounts of time, but whether that time saved is statistically significant is debatable. The author believes, however, that there is further work to be done here and that there is great potential to improve upon these results in future projects.

The Washington City Paper digital collection is a work in progress, and is available on Dig DC.

About the Author

Paul Kelly (paul.kelly2@dc.gov) is Digital Initiatives Coordinator for the People’s Archive at DC Public Library. He holds his M.S. in Library and Information Science from the Catholic University of America and M.A. in English Literature/Film and Television Studies from the University of Glasgow. His professional interests include collections as data, web archiving, and digital preservation.
