by Athina Livanos-Propst

Introduction

In 2018, PBS LearningMedia (an online destination offering free access to thousands of classroom-ready resources, made possible through a partnership between PBS and WGBH) took on a project to review and refresh its library of teacher-focused, born digital materials. What follows is an overview of the process PBS LearningMedia undertook. Organizations of any size can adapt the process to their own non-archival digital collections. The software used is widely available and accessible to librarians at many different levels of technical expertise.

PBS LearningMedia was launched in May of 2011. At launch, the site’s goal was to make PBS’s media and teacher materials accessible in one digital destination, as well as to distribute high-quality educational resources from PBS member stations and other organizations. These materials included full-length videos, clips, documents, still images, games, and lesson plans. The editorial standards and policies when the site was founded were carefully crafted to ensure that the collection would be robust and beneficial to patrons. Since then, editorial standards have shifted to include a requirement for contextualizing video clips, a desired reduction in linking to external sites, and combining small pieces into larger, more robust teacher materials.

In the summer of 2017, the collection reached a critical mass, surpassing 100,000 available resources. The content team realized that due to the acquisition policy shifts, the full collection was no longer up to the current editorial standard. A full-scale audit of the content collection began to weed out materials that were no longer up to current editorial specifications and to inform stakeholders of the removal of those materials and the reasons for removal. The collection had also never previously been reviewed with deaccessioning in mind. To that end, a process was developed to review and assess the materials and begin this process for the first time.

Considerations & Rationale

At 100,000 resources, the collection was too large for users to easily navigate and find the high-quality resources needed for their classrooms. A few choices were made at the beginning of the process. The nearly 45,000 resources that were single images would not be assessed. These pieces were used minimally. Therefore, it was decided that select image collections would be removed from search, but still accessible via direct links.

It was also decided that the weeding review would only look at materials that had been added to the collection prior to 2015. Because the acquisition and editorial standards became better enforced around that time, that would be the area of the collection that would likely experience the largest loss during the weeding process.

That narrowed scope left 7,457 resources to be reviewed in the humanities and 6,827 in STEM fields (science, technology, engineering, and math).
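The scoping decisions above amount to a simple filter over the catalog. The following is a minimal sketch of that filter, not the team's actual tooling: the `Resource` fields, the `media_type` and `subject_group` values, and the sample records are hypothetical, and the January 1, 2015 cutoff is the one stated later in this article.

```python
from dataclasses import dataclass
from datetime import date

# Cutoff stated in the article: only material added before 2015 was reviewed.
REVIEW_CUTOFF = date(2015, 1, 1)


@dataclass(frozen=True)
class Resource:
    # Hypothetical catalog record; field names are assumptions for illustration.
    resource_id: str
    media_type: str      # e.g. "video", "image", "document"
    subject_group: str   # e.g. "humanities" or "stem"
    date_added: date


def in_review_scope(resource):
    """Single images are skipped; everything else added before the cutoff is reviewed."""
    if resource.media_type == "image":
        return False
    return resource.date_added < REVIEW_CUTOFF


def review_queues(resources):
    """Group the in-scope resource ids by subject group."""
    queues = {}
    for r in resources:
        if in_review_scope(r):
            queues.setdefault(r.subject_group, []).append(r.resource_id)
    return queues


catalog = [
    Resource("r1", "video", "humanities", date(2013, 4, 2)),
    Resource("r2", "image", "humanities", date(2012, 9, 15)),
    Resource("r3", "document", "stem", date(2014, 11, 30)),
    Resource("r4", "video", "humanities", date(2016, 1, 8)),
]
queues = review_queues(catalog)
```

Here `r2` is excluded as a single image and `r4` as post-cutoff material, leaving one resource in each subject group's queue.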

The process that will be outlined here is focused exclusively on what was done to review the humanities materials. The STEM materials were reviewed by a partner organization and followed a different process. Organizations wishing to perform their own assessment of born digital content should note that the humanities process is not exclusive to the humanities and could be readily adapted to the sciences.

The process occurred in two primary phases. The first would be the development of a rubric tool with which we could assess the content on the site. The second phase would be the actual utilization of those rubrics by members of the content team to perform the assessment itself. The results from phase two would then be shared with the appropriate contributor as a tool for requesting and making changes to bring the resource materials up to the editorial standard.

Design & Iterations

It is vital when undergoing any weeding process to consider your patron community. In this case, our community was educators, who have different needs than other patron groups. Our first step was to develop an impartial tool to grade each resource that kept the needs of our patron community at the forefront.

Multiple weeding guidelines for physical collections were reviewed (see Further Reading at end). We selected six primary areas to focus on for the structure of the resource review: Accuracy, Currency, Appearance, Relevance, Contextualization, and Usage.

For the purposes of this review and assessment, each of the terms was defined in the following manner:

- Accuracy

    - Is the information presented in the resource technically accurate and factually correct?

        - Example: Shakespeare was a famous writer in his time.

        - Example: Jackson Pollock did large scale, semi-performative abstract paintings.

        - Example: An explanation of the sounds letters make to teach chunking.

- Currency

    - Is the resource balanced? Are there missing perspectives due to when the material was made? Was this accurate at the time, but no longer reflects current understanding?

        - Example: Does the resource state that Lincoln freed slaves, or is a more nuanced approach taken?

        - Example: Does the lesson on Uncle Tom’s Cabin include both the inspiration it gave abolitionists as well as the backlash from the African American community?

- Relevance

    - Is this easy for teachers to use? How well is the resource aligned to standards? Does the wording of the intro paragraph aid in the usage of the materials?

        - Example: The standards listed under the resource all make sense.

        - Example: Resource approaches a standard topic, but via a pop-culture lens to encourage interest in students.

- Appearance

    - Does the video quality meet current standards? Is the audio quality intelligible?

        - Example: Is the video quality distractingly granulated?

        - Example: Are there images of technology or fashion that are so outdated it could cause a distraction from the learning material?

- Contextualization

    - Does the resource have support materials attached? Are there contextualizing questions and/or activities ‘baked in’ to the primary resource? What is the overall quality of the contextualization efforts?

        - Example: The resource has support materials for handouts, quizzes, and discussion questions.

        - Example: The resource incorporates a quiz within the media itself.

        - Example: Do the support materials call for simple recall tasks or for a larger understanding and interpretation?

- Usage

    - How often has the resource been viewed? How often has the resource been favorited?

        - Raw numerical counts based on the information listed on the resource page.

After primary areas of focus had been established, the next phase of the review process was able to begin. This stage involved hiring a series of subject matter experts (SMEs) to make specific rubrics, one for each subject area that PBS LearningMedia covers. These experts were teachers from across the country, who could speak directly to the content needs of our primary patron base.

The SMEs were contracted to deliver three iterations of rubrics during the development process. For each iteration, the subject specific rubrics would be tested by a small group of internal team members. The internal team would grade two resources per subject in accordance with the rubric draft provided. The SMEs were then able to take the grades in front of them and see how they, as teachers, would have assessed the same materials. In this manner, we could test to see that the language for the rubrics both meant something concrete to teachers and was understandable for those outside the education field. We could also be assured that our standards for retaining content were in line with usefulness to our core user base.

All SMEs provided first drafts of rubrics that covered every point they could think of, both about the quality of the materials and the quality of the site itself. This was both beneficial and problematic. It was beneficial in that it allowed the SMEs to ask all their questions and get a better sense of the limitations of the system. SMEs asked about accessibility features that were already integrated into the site, colors, text size and formatting, and similar issues. These site-wide issues, while important, were not a part of the resource quality review. It was problematic in that the SMEs had to be strongly course-corrected away from the formatting of the site itself and toward classroom-focused needs. It was also useful in that it produced detailed notes on site design, which were passed along to the usability design team working on a separate, parallel project.

The second iteration of the rubrics was more focused on a resource’s viability in the classroom. The questions brought forward on this rubric draft from the SMEs focused on areas of content and technical specifications that could be edited by either the PBS LearningMedia content team or the contributors themselves. In retrospect, it would have been helpful to brief the SMEs more fully on the site’s existing specifications and adaptability. That would have allowed the first draft to be more focused.

The second iteration also saw the largest change in approach to the project overall. At the suggestion of one of the internal team members, we opted to transition from a grading system that allowed for huge amounts of variation to a stripped down three tier option. Each question would be assessed on the simple scale of “Pass,” “Needs Work,” or “Fail.” Resources would either be good enough, fixable, or below our standards.

The third and final iteration of the rubric process focused predominantly on getting our SMEs to write out what “Pass,” “Needs Work,” or “Fail” would look like for each question in their subject area. The descriptions for what was good enough, what a teacher could somewhat work with, and what a failure looked like were highly valuable in communicating educator needs to non-educator content producers. It was this final step that allowed our production teams to know what they were looking for and to more accurately assess if the materials on the site were meeting teachers’ needs.

Once the rubrics were developed, the first major hurdle of the weeding project was overcome. We now had a tool with which to assess the born digital content that would be understandable by any user, while ensuring value to our core patron base.

The rubrics were loaded into a single, combined Google form. The questions that applied to all pieces, regardless of subject matter, were asked first, followed by a pointer question that led to the subject-specific questions, with a final section of data metrics and ‘nice to have’ features completing the form. The combined form came in at just under 37 pages. That was spread out over seven subject areas, so each individual resource went through only a small portion of the complete rubric.

Each subject was assigned to an individual with some level of subject expertise in the given area. Library staff with the right backgrounds were pulled in from other projects, and three of the SMEs from the rubric writing were retained. Each individual was given a list of resources to review that had been published on the PBS LearningMedia platform prior to 2015 and tagged to their designated subject area.

Reviewers were tasked with reviewing what PBS LearningMedia defines as a “single resource page,” that is, watching the video(s) on each given page and reading any accompanying documentation and teacher support materials. Each review took, on average, 10-15 minutes to complete, including entering the results into the Google form.

The phase two review process took four months. During that time, small corrections were made to the form, mostly clarifications of terms and combining and moving of questions. During the first two months of the review, weekly check-in meetings were held. These meetings allowed for consistent interpretation of the rubrics across all team members. After the first two months, the team’s work was consistent and of high enough quality that it was determined that the hour-long meeting time each week could be better spent doing the review work itself.

Assessment

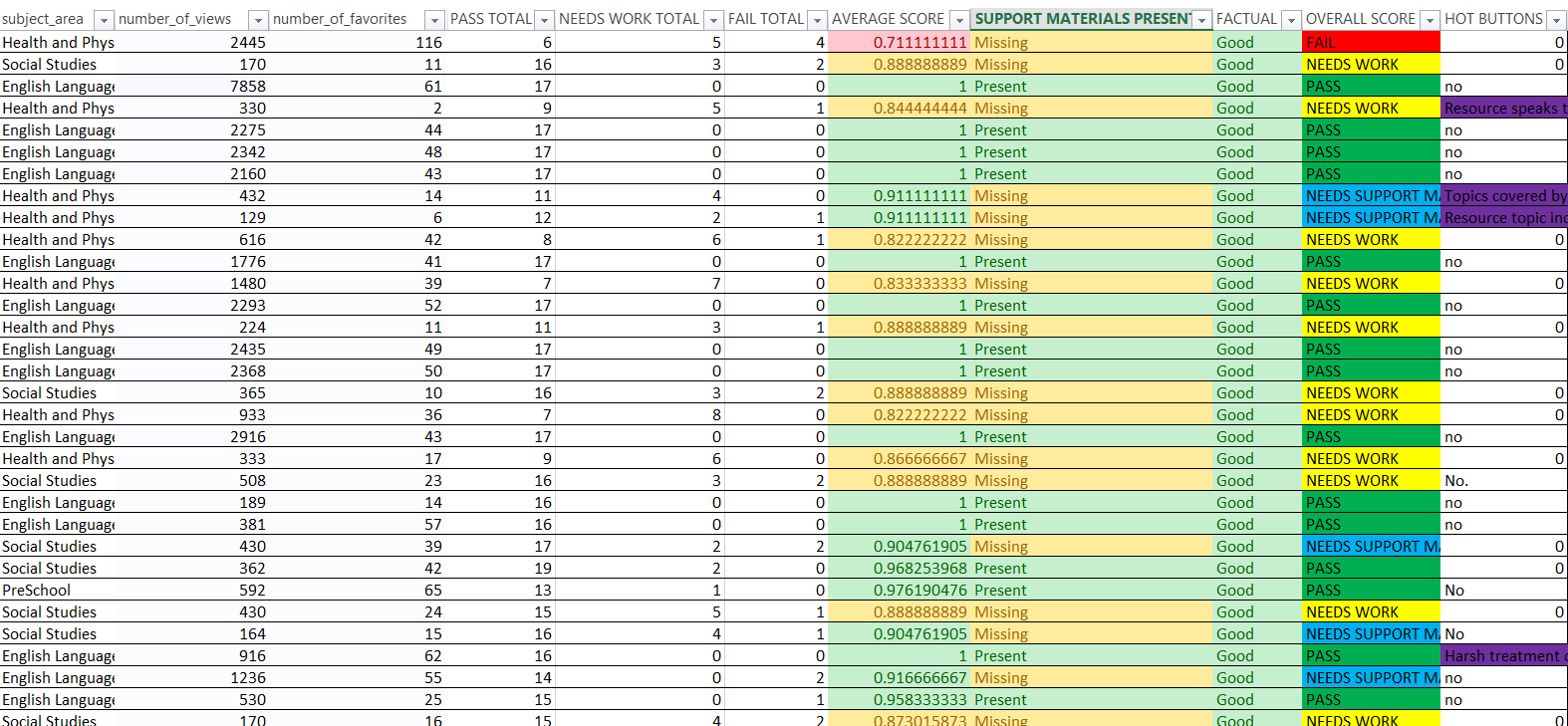

Once the review phase was completed, the task of assembling and assessing the data began. We used the fact that Google Forms automatically outputs to Google Sheets to our advantage. The raw data was downloaded from Google to compile individual sheets for each organization that had contributed to the database.
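The step of splitting the raw export into per-contributor sheets can also be scripted. The sketch below is one possible approach using Python's standard `csv` module, not the workflow the team used; the column names (`Resource`, `Contributor`, `Overall`) and the sample rows are assumptions made for illustration.

```python
import csv
from collections import defaultdict
from io import StringIO


def split_by_contributor(rows, contributor_column="Contributor"):
    """Group exported form rows by the contributing organization.

    `rows` is any iterable of dicts, such as csv.DictReader output.
    The column name is hypothetical and should match the real export.
    """
    sheets = defaultdict(list)
    for row in rows:
        sheets[row[contributor_column].strip()].append(row)
    return dict(sheets)


# Stand-in for a CSV file downloaded from the form's response sheet.
export = StringIO(
    "Resource,Contributor,Overall\n"
    "Clip A,Station One,Pass\n"
    "Clip B,Station Two,Fail\n"
    "Clip C,Station One,Needs Work\n"
)
sheets = split_by_contributor(csv.DictReader(export))
```

Each value in `sheets` can then be written out with `csv.DictWriter` to produce one file per contributor.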

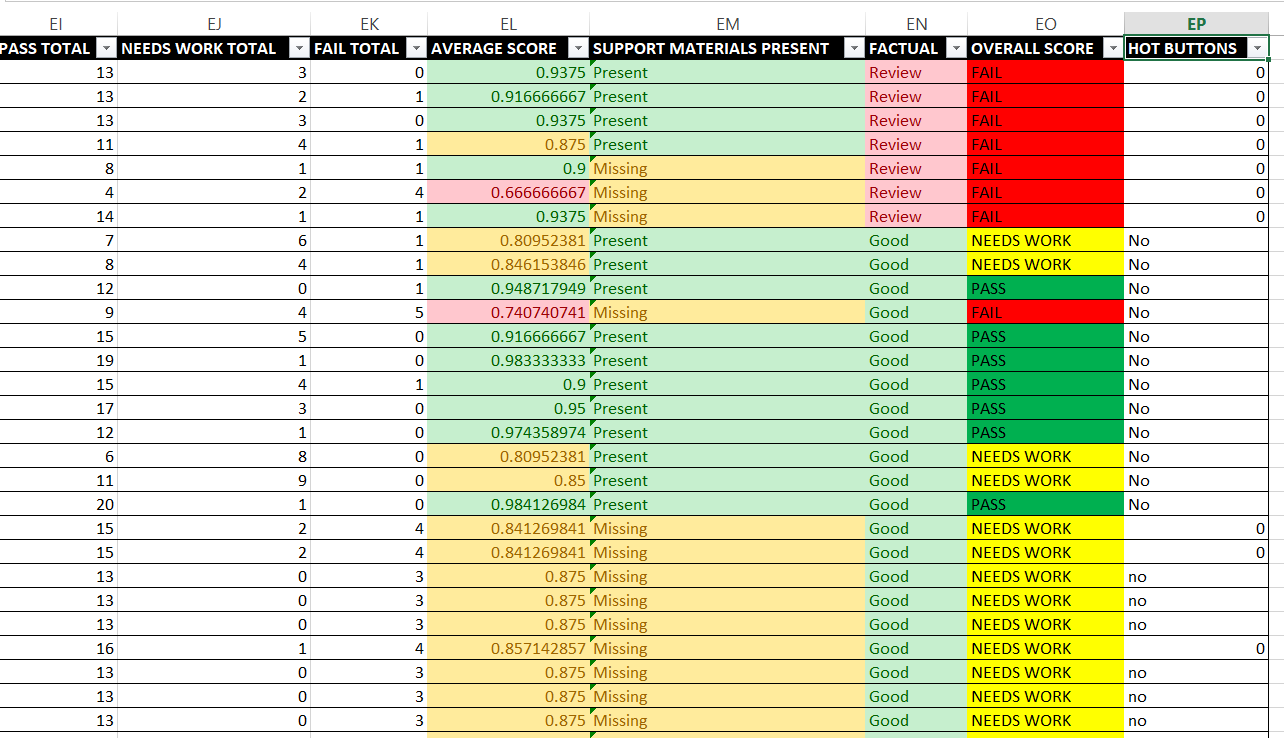

Scores for resources were calculated into the following fields:

- Rubric score

    - A simple percentage score based on how the resource performed in every Pass/Needs Work/Fail question answered on the rubric

    - Three points were awarded for a Pass, two for a Needs Work, and one for a Fail

    - Formula to count graded answers for each resource row (shown for Pass; columns EJ, EK, and EL in the next formula hold the Pass, Needs Work, and Fail counts)

        - =COUNTIF(G2:EI2, "Pass*")

    - Formula to calculate overall percentage score

        - =(AVERAGE((EJ2*3)+(EK2*2)+(EL2*1))/SUM(EJ2:EL2)/3)

- Factual

    - A check on a question in the universal section of the rubric, designed to ensure that only Pass level factual materials will be approved

    - Formula for results check on factual question

        - =IF(COUNTIF(P2, "Pass*")+COUNTIF(AY2, "Pass*")+COUNTIF(BC2, "Pass*")+COUNTIF(CC2, "Pass*"), "Good", "Review")

- Support Materials Present

    - A check on several cells to see whether the resource has been given support materials in any format

    - Formula for check of presence of support materials, multiple questions

        - =IF(SUM(COUNTIF(AA2, {"Pass*";"Needs Work*"}),COUNTIF(AB2, {"Pass*";"Needs Work*"}),COUNTIF(AK2, {"Pass*";"Needs Work*"}),COUNTIF(AW2, {"Pass*";"Needs Work*"}),COUNTIF(BD2, {"Pass*";"Needs Work*"}),COUNTIF(BG2, {"Pass*";"Needs Work*"}),COUNTIF(BJ2, {"Pass*";"Needs Work*"}),COUNTIF(BP2, {"Pass*";"Needs Work*"}),COUNTIF(CF2, {"Pass*";"Needs Work*"}),COUNTIF(EC2, {"Pass*";"Needs Work*"})), "Present", "Missing")

- Overall Score

    - Pass

        - Rubric score, 90% or above

        - Factual, positive result

        - Support materials, positive result

    - Needs Work

        - Rubric score, 80% or above

        - Factual, positive result

    - Fail (either condition applies)

        - Rubric score, below 80%

        - Factual, negative result

    - Formula to assess final score, dependent on above scoring results

        - =IF(AND(EM2>=0.89,EN2="Present",EO2="Good"),"PASS",IF(AND(EM2>=0.89,EN2="Missing",EO2="Good"),"NEEDS SUPPORT MATERIALS ONLY",(IF(AND(EM2>=0.8,EN2="Missing",EO2="Good"),"NEEDS WORK",(IF(AND(EM2>=0.8,EN2="Present",EO2="Good"),"NEEDS WORK",IF(AND(EM2<0.8,EN2="Missing",EO2="Review"),"FAIL","FAIL")))))))

Additionally, all results were color coded, as well as all notes fields in the results panel itself.
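For readers who prefer to work outside a spreadsheet, the scoring rules listed above can be approximated in a few lines of Python. This is a sketch of the logic as read from the formulas, not code used in the project: the function names are hypothetical, answers are matched by prefix to mimic the `"Pass*"` wildcard, and the 0.89 threshold follows the final-score formula rather than the 90% figure in the prose.

```python
# Points per grade, as described in the rubric score section.
POINTS = {"Pass": 3, "Needs Work": 2, "Fail": 1}


def grade_of(answer):
    """Return the grade an answer starts with, mimicking the "Pass*" wildcard match."""
    for grade in POINTS:
        if answer.startswith(grade):
            return grade
    return None  # unanswered or non-graded cell


def rubric_score(answers):
    """Points earned divided by the maximum possible (3 per graded answer)."""
    grades = [g for g in map(grade_of, answers) if g is not None]
    if not grades:
        return 0.0
    return sum(POINTS[g] for g in grades) / (3 * len(grades))


def overall_score(score, factual_ok, support_present):
    """Combine the three checks the same way the final-score formula does."""
    if not factual_ok or score < 0.8:
        return "FAIL"
    if score >= 0.89:
        return "PASS" if support_present else "NEEDS SUPPORT MATERIALS ONLY"
    return "NEEDS WORK"


example = rubric_score(["Pass", "Pass", "Needs Work", "Fail", ""])
```

In the example, four graded answers earn 9 of a possible 12 points, so the rubric score is 0.75 and the resource would fail regardless of the other two checks.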

Figure 1. A more condensed summary tab was also provided for a quick assessment of the materials.

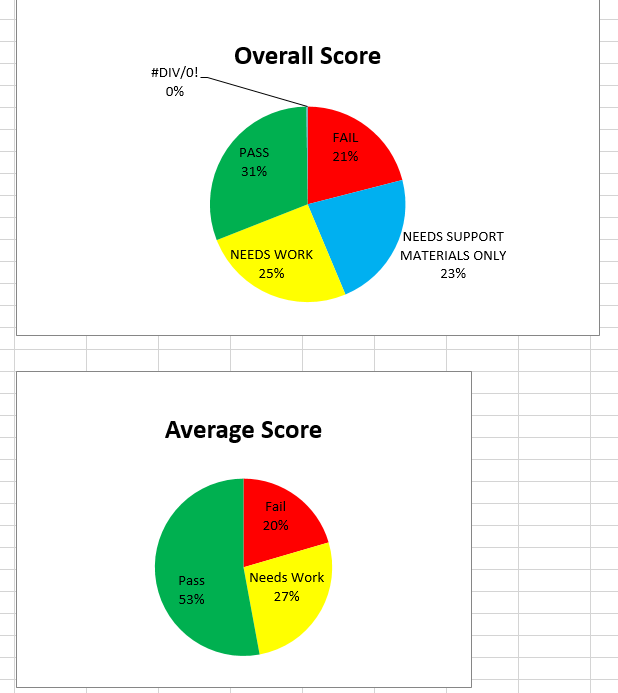

Figure 2. The data for each score section was also depicted in a series of charts.

Figure 3. Sample view of the executive summary tab, intended to convey key information to report recipients.

Figure 4. Graphic breakdown of full data, showing the scores in key areas as well as the overall score.

The prepared sheets were sent to each contributor who had added material to the site prior to 2015. Even though much of the feedback was that many materials would have to be pulled due to the elevation in standards, contributors were mostly positive in their response.

They readily accepted that their materials would not be hosted indefinitely, and they were open to making now required changes on existing materials. Many contributors were also very thankful to have a list of questions they could ask of their own materials before adding new pieces to the collection.

There were some contributors who took the feedback as an opportunity to reorganize, restructure, or rearrange their existing materials to allow for educational goals to be met. In this way, many resources that originally scored poorly could be spared by combining them together to create a larger narrative that was able to achieve a passing score.

Another benefit of sending out the result forms was that it encouraged several lapsed contributors to become interested in adding to the platform once again. We have seen a spike in potential users signing up for training sessions about how to utilize the database for uploading new content.

Notes

One of the issues that we planned around was the awareness that our collection numbers would drop significantly. All resources that received a grade of “Fail” would be removed from the website, and all that received a grade of “Needs Work” would be un-indexed in search, but still available to users with a direct link.
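The removal rules above map each grade to a single action, which makes it straightforward to estimate the drop in collection size before anything is changed. The sketch below is illustrative only: the action labels and sample grades are hypothetical, and it assumes that resources graded “Pass” simply stay searchable.

```python
from collections import Counter

# Grade-to-action mapping taken from the rules described above.
# The "Pass" entry is an assumption: passing resources are left as they are.
ACTIONS = {
    "Pass": "keep in search",
    "Needs Work": "remove from search, keep direct link",
    "Fail": "remove from site",
}


def plan_actions(grades):
    """Return the action for each resource id plus a tally of actions."""
    actions = {rid: ACTIONS[grade] for rid, grade in grades.items()}
    return actions, Counter(actions.values())


# Hypothetical grades keyed by resource id.
grades = {"r1": "Pass", "r2": "Fail", "r3": "Needs Work", "r4": "Fail"}
actions, tally = plan_actions(grades)
```

The tally gives a quick preview of how many resources would remain searchable, become direct-link only, or leave the site.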

We timed the launch of the weeding project results to coincide with an overall site redesign. The thought was that the materials on the refreshed site would all align to the new acquisition standards. Timed with the new look and feel, both changes could be positioned as the new and improved PBS LearningMedia. This choice proved to be a wise one, as we had minimal reports from users asking about the reduced resource count.

Moving forward, we are continuing to review content that was added to the site after our initial cut off point of January 1, 2015. The current plan is to be able to review materials as they hit three years of age in the system. We have reduced the review team to 1.5 full time staffers, who are able to review an average of 500 resources per month.
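The rolling review plan can be expressed as a small scheduling helper. This is a sketch under stated assumptions rather than the team's process: the function names are hypothetical, the three-year age and the 500-per-month capacity come from the paragraph above, and a February 29 date is assumed to come due on February 28.

```python
import math
from datetime import date

REVIEW_AGE_YEARS = 3     # resources are reviewed as they reach three years of age
MONTHLY_CAPACITY = 500   # average reviews per month reported for the current team


def review_due(date_added):
    """Date on which a resource reaches review age."""
    target_year = date_added.year + REVIEW_AGE_YEARS
    try:
        return date_added.replace(year=target_year)
    except ValueError:
        # February 29 has no counterpart in a non-leap year; use February 28.
        return date_added.replace(year=target_year, day=28)


def due_for_review(added_dates, today):
    """Dates added whose three-year mark has already passed."""
    return [d for d in added_dates if review_due(d) <= today]


def months_to_clear(backlog):
    """Whole months needed to review a backlog at the stated capacity."""
    return math.ceil(backlog / MONTHLY_CAPACITY)


due = due_for_review([date(2015, 6, 1), date(2017, 6, 1)], today=date(2019, 1, 23))
```

At the stated capacity, a backlog of 1,200 resources would take three months to clear.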

We also hope to be able to convert the forms being sent to contributors to an automated form.

About the Author

Athina Livanos-Propst is a Digital Librarian for PBS Education, overseeing the metadata implementation and strategy for PBS LearningMedia. She earned her MLIS from The Catholic University of America, where she specialized in cataloging and special collections. Her primary focus is born digital collections and supporting linked data.

Further Reading

Larson, Jeanette. 2008. CREW: A Weeding Manual for Modern Libraries. Texas State Library and Archives Commission [Internet]. [cited 2019 Jan 23]; Available from: https://www.tsl.texas.gov/sites/default/files/public/tslac/ld/pubs/crew/crewmethod08.pdf

Vnuk, Rebeca. 2016. Weeding without Worry. American Libraries [Internet]. [cited 2019 Jan 23]; Available from: https://americanlibrariesmagazine.org/2016/05/02/library-weeding-without-worry/
